Artificial intelligence is no longer something healthcare and life sciences teams are testing on the side. The question now is how these organizations can adopt new capabilities without sacrificing rigor, privacy and trust.

Recent industry research highlights three forces shaping the next phase of AI: agentic AI, physical AI and sovereign AI.1 Together they point to a future in which AI is more autonomous, more embedded in real-world environments and likely more tightly governed.

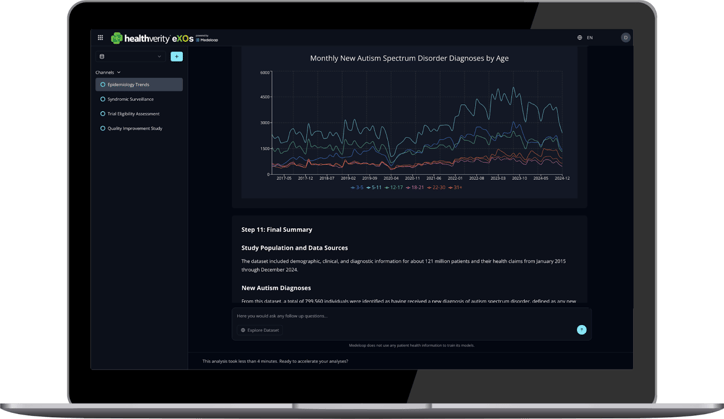

At HealthVerity, we are already seeing what this looks like in practice. With HealthVerity eXOs, we are applying agentic AI to one of healthcare’s most persistent challenges: turning complex, multi-source claims data into defensible evidence. Instead of replacing expertise, agentic AI augments it, handling analytical execution while keeping logic visible and auditable.2

As data volumes grow and decision cycles shorten, organizations need AI that accelerates insight while operating within strict privacy and governance guardrails. Below, we explore how agentic AI, physical AI and sovereign AI are evolving and what early signals suggest as we look toward 2026.

Agentic AI in healthcare: from pilots to real-world analytics

Agentic AI refers to autonomous systems that can plan, adapt and act across multi-step processes. Instead of supporting a single task, these agents can collaborate with other agents and with humans to move work forward.

For healthcare and life sciences teams, the appeal is that agentic AI could reduce manual effort across complex workflows, from evidence generation to real-world monitoring, while freeing experts to focus on interpretation and decision making.

Example: HealthVerity eXOs, an agentic AI solution, answers a researcher’s plain-English question about autism diagnoses by age by querying a database of real-world closed claims data.2

Current adoption of agentic AI in healthcare and life sciences

Most organizations are still early. Deloitte’s research shows that agentic AI deployments largely remain in pilot phases, with broader adoption concentrated among larger, technology-forward enterprises.1 At the same time, sentiment is shifting: nearly half of LinkedIn respondents expect autonomous agents to meaningfully change their organizations within the next two to three years.1

Early predictions for 2026

- Pilots move into production. As new agentic solutions mature, adoption is likely to expand beyond experimental use cases.

- Governance becomes a priority. Autonomous systems raise new questions around accountability, validation and oversight that organizations can no longer defer.

- New roles emerge. Expect increased investment in upskilling, including teams focused on monitoring, training and governing AI agents.

Physical AI and its impact on real-world healthcare operations

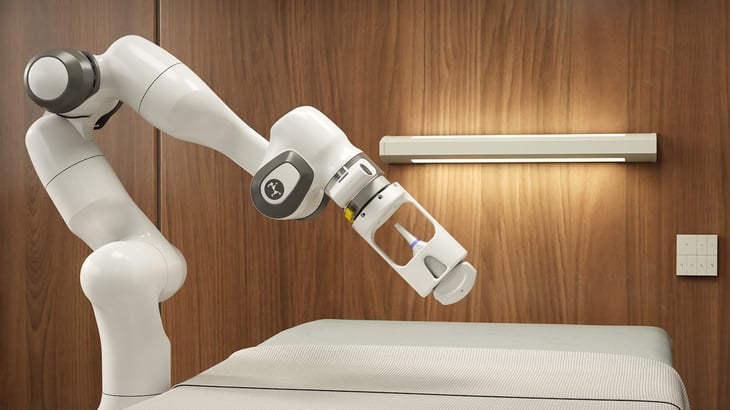

Physical AI refers to artificial intelligence systems that sense, interpret and act in the physical world, rather than operating solely in software. It combines AI with hardware such as sensors, medical devices, robotics and connected infrastructure so systems can respond to real-world conditions in real time.

In healthcare, this often shows up where data meets movement, environment or equipment. Examples include smart infusion pumps that adjust dosing, hospital robots that move supplies, autonomous X-ray and ultrasound imaging,3 wearable devices that monitor patients continuously and logistics systems that adapt to real-time demand. The value lies in applying intelligence where manual processes have traditionally limited scale, safety or speed.

An example of physical AI in action: NVIDIA announced a collaboration with GE HealthCare to advance autonomous imaging, including work on autonomous X-ray technologies and ultrasound applications.3

Current impact of physical AI in healthcare settings

Adoption expectations are more measured. Many AI leaders anticipate only minimal to moderate use in the near term, citing barriers such as hardware costs, safety requirements and regulatory complexity. Public sentiment is more optimistic: over half of LinkedIn respondents expect moderate to significant operational impact in the next few years.

Early predictions for 2026

- Targeted adoption. Asset-heavy sectors like manufacturing, logistics and healthcare are likely to see faster uptake where return on investment is clear.

- Heightened focus on safety and security. Physical safeguards, cyber protections and auditability will be essential as AI interacts directly with people and assets.

- Better human-machine collaboration. As interfaces improve, teams will learn how to work alongside physical AI systems in more integrated ways.

Sovereign AI and the future of healthcare data governance

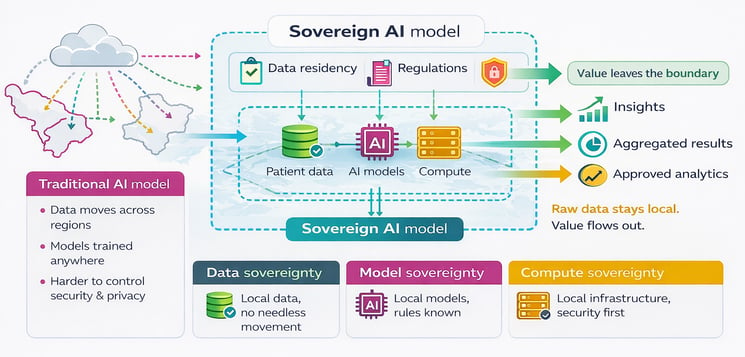

Sovereign AI focuses on the idea that nations should develop and maintain independent AI capabilities rather than rely on systems controlled by foreign entities or multinational corporations.4 In practical terms, it means organizations can decide where sensitive data is stored, where models are trained and where AI workloads run, rather than defaulting to globally distributed infrastructure. For regulated industries, this is less of a theoretical concern and more of a strategic requirement.

Healthcare and life sciences organizations operate under strict privacy and data residency rules. Sovereign AI approaches help manage regulatory risk while building confidence with patients, partners and regulators.

Sovereign AI lets organizations use AI without moving sensitive healthcare data outside required local or regional boundaries.4

Why healthcare organizations are prioritizing sovereign AI

Across industries, AI leaders view data residency and compute constraints as increasingly important. The urgency is highest in regulated sectors like healthcare, life sciences, financial services and the public sector. Broader sentiment mirrors this view, shaped by ongoing regulatory developments and high-profile data security incidents.

Early predictions for 2026

- Greater regulatory scrutiny. Organizations should expect continued expansion of data privacy and AI governance requirements globally.

- Growing demand for compliant solutions. Multi-cloud and edge strategies will become more common as teams localize data and compute.

- Emergence of regional AI hubs. Governments and regions are likely to invest in local ecosystems to reduce reliance on external providers.

What AI trends in 2026 mean for healthcare leaders

Agentic AI, physical AI and sovereign AI signal a shift in how artificial intelligence will be used across healthcare and life sciences. AI is taking on more responsibility, moving closer to real-world operations and operating under stricter expectations for transparency, control and compliance.

Progress will favor organizations that build on solid foundations. Autonomous systems still need explainable logic. AI embedded in physical settings still requires safety and oversight. Sovereign approaches still depend on privacy-preserving design and scalable governance.

As these trends mature, they will continue to influence how evidence supports development, market access and post-launch decision making. The challenge ahead is not whether AI belongs in life sciences, but how to deploy it in a way that maintains confidence in the data, the methods and the conclusions drawn from them.

Learn more about our approach to AI-driven evidence with HealthVerity eXOs.2

References

1. Three new AI breakthroughs shaping 2026: AI trends. Deloitte US. https://www.deloitte.com/us/en/what-we-do/capabilities/applied-artificial-intelligence/blogs/pulse-check-series-latest-ai-developments/new-ai-breakthroughs-ai-trends.html

2. HealthVerity eXOs. HealthVerity. https://healthverity.com/exos/

3. NVIDIA and GE HealthCare collaborate to advance the development of autonomous diagnostic imaging with physical AI. NVIDIA Newsroom. https://nvidianews.nvidia.com/news/nvidia-and-ge-healthcare-collaborate-to-advance-the-development-of-autonomous-diagnostic-imaging-with-physical-ai

4. Dale R. Sovereign AI in 2025. Natural Language Processing. 2025;31(5):1312-1321. doi:10.1017/nlp.2025.10007